How Recruiters Can Increase Software Engineer Hiring Throughput and Hire the Best 20% in the Pool

Scoring software engineering candidates’ solutions for logic can improve outcomes for candidates, recruiters, and engineering teams. Learn how it can help you find the best developers and reach your hiring goals.


Nearly every company is software-driven, and as a result they are all constantly seeking good developers. Whenever a friend asks me to refer a good developer to their company, I reply: why would I refer them to you when I could hire them for my own team? But what does having coding skills really mean? What do we look for when we hire developers? Is it the ability to write functionally correct code, meaning code that passes test cases? Nah, not exactly.

What does it really mean to be the “best” developer?

A seasoned interviewer would tell you that there is much more to writing code than passing test cases. For starters, we care more about how well a candidate understands the problem and approaches a solution than their ability to write functionally correct code. “Did the candidate get the logic?” is generally the question discussed among interviewers. We are also interested in seeing whether the candidate’s logic (algorithm) is efficient—with low time and space complexity.

We also care about how readable and maintainable a candidate makes their code. Dealing with badly written code that breaks under exceptions and is not amenable to fixes can dramatically slow down software engineering teams whose time is already scarce. So why do most automated assessments base candidate success on the number of test cases passed? Scoring on test cases alone produces false negatives that are often accepted as a sunk cost of the hiring process. But they do not have to be.

How well can AI grade programs?

If AI can drive cars automatically these days, can it not evaluate programs like humans? It can. In our KDD paper, we showed that by using machine learning (ML), we could evaluate and score programs on multiple parameters as well as humans do. The correlations between the machine’s ratings and those of human experts were as high as 0.8-0.9.

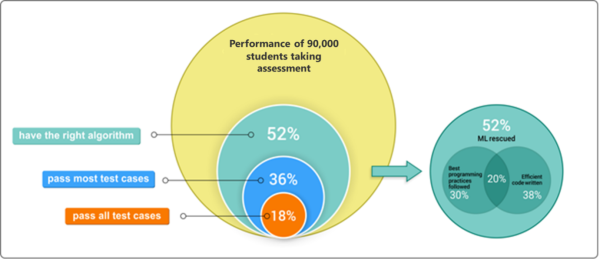

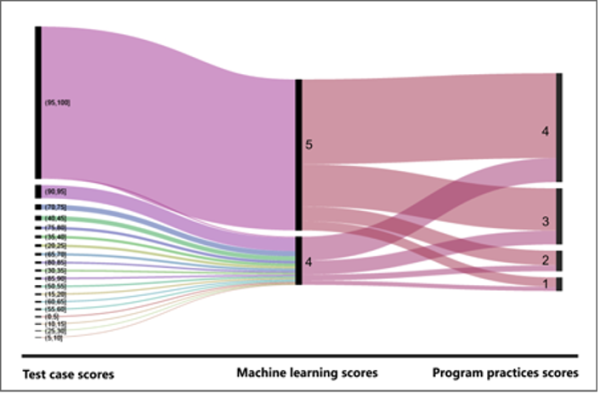

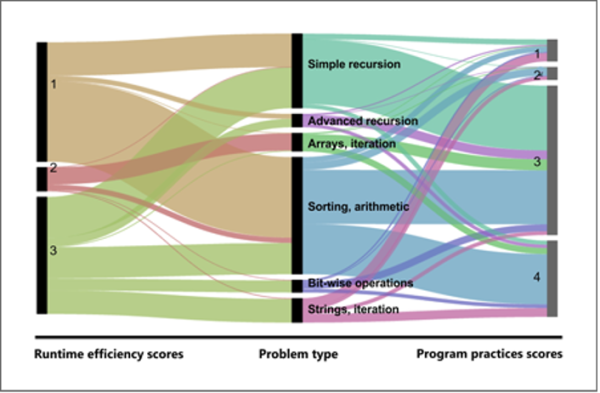

Why is this useful? We looked at a sample of 90,000 US-based candidates whose code was assessed by SHL Technology Hiring. These were seniors graduating with a computer science/engineering degree who were interested in software engineering jobs. They were scored on four metrics: percentage of test cases passed, correctness of logic as detected by our ML algorithm (scored on a scale of 1-5), run-time efficiency (on a scale of 1-3), and use of best coding practices (on a scale of 1-4). Scores on the Coding Skill Assessment provide answers to the following critical questions:

- Is the logic/thought process right?

- Is the code going to be efficient?

- Will it be maintainable and scalable?

"A clever man commits no minor blunders"

– Von Goethe

Typically, companies look for candidates who get the code nearly right. In the study described above, only 36% of the seniors passed 80% of the test cases in the simulation. In a typical recruiting scenario, the remaining 64% would have been rejected and not considered any further. We turned to our machine learning algorithm to see how many of these “left out” candidates had actually written logically correct programs with only minor errors.

We find that a further 16% of all candidates, drawn from this rejected group, were scored 4 or above by our system, which meant they had written code that had ‘correct control structures and critical data-dependencies but had some minor mistakes’. These folks should have been considered for the remainder of the process! Smart algorithms, like human experts, are able to spot what would be missed by test cases.
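The screening rule described above can be sketched in a few lines of Java. This is only an illustration: the class and method names are hypothetical, reading "passed 80% of the test cases" as "at least 80%" is an assumption, and the logic score is simply taken as an input rather than computed by any model.

```java
final class Screening {
    // Cut-offs taken from the article: 80% of test cases passed,
    // and a logic score of 4 or above on the 1-5 scale.
    // Treating the 80% cut as "at least 80%" is an assumption.
    static final double TEST_PASS_CUTOFF = 0.80;
    static final int LOGIC_SCORE_CUTOFF = 4;

    // Conventional screen: only the fraction of test cases passed counts.
    static boolean passesTestCaseScreen(double fractionPassed) {
        return fractionPassed >= TEST_PASS_CUTOFF;
    }

    // Widened screen: a candidate also advances when the logic score
    // says the program is essentially right despite minor mistakes.
    static boolean advances(double fractionPassed, int logicScore) {
        return passesTestCaseScreen(fractionPassed)
                || logicScore >= LOGIC_SCORE_CUTOFF;
    }

    public static void main(String[] args) {
        // A candidate who passes under half the tests but has sound logic.
        System.out.println(passesTestCaseScreen(0.45)); // false
        System.out.println(advances(0.45, 4));          // true
    }
}
```

Under the test-case screen alone, the candidate in `main` would be rejected; the widened rule keeps them in the funnel.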

We find that the code from many of these candidates passes fewer than 50% of test cases (see figure 1). Why would this be happening? We sifted through some examples and found some very interesting ways in which students made errors. For a simulation item in which candidates are asked to remove vowels from a string, one candidate made a mistake that even the best in the industry fall for at times: incorrect usage of ORs and ANDs with the negation operator. Another had implemented the logic well but made a mistake on the very last line, because they did not know how to convert a character array back to a string in Java.

A lack of such specific knowledge is typical of those who have not spent enough time with Java, but it is easy to learn. It should not cost these candidates their score in a coding assessment, nor stop them from differentiating themselves from those who could not think of the algorithm at all.
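To make the two mistakes concrete, here is a minimal Java sketch of how they might look. The candidates' actual code is not shown in the study, so the buggy condition below is just one plausible form of the OR/AND error; the class and method names are hypothetical, and treating both upper- and lower-case letters as vowels is an assumption.

```java
final class VowelRemoval {
    // Buggy: every character differs from at least one vowel, so a chain of
    // "!=" joined by OR is always true and no vowel is ever filtered out.
    static boolean buggyIsNotVowel(char c) {
        char l = Character.toLowerCase(c);
        return l != 'a' || l != 'e' || l != 'i' || l != 'o' || l != 'u';
    }

    // Fixed: by De Morgan's law, "not (a or e or ...)" is
    // "not a AND not e AND ...".
    static boolean isNotVowel(char c) {
        char l = Character.toLowerCase(c);
        return l != 'a' && l != 'e' && l != 'i' && l != 'o' && l != 'u';
    }

    static String removeVowels(String input) {
        char[] kept = new char[input.length()];
        int n = 0;
        for (char c : input.toCharArray()) {
            if (isNotVowel(c)) {
                kept[n++] = c;
            }
        }
        // The "very last line" pitfall: kept.toString() returns a type-and-hash
        // string such as "[C@1b6d3586", not the characters. The String
        // constructor (or String.valueOf) performs the actual conversion.
        return new String(kept, 0, n);
    }

    public static void main(String[] args) {
        System.out.println(removeVowels("Hello World")); // Hll Wrld
    }
}
```

Both slips would fail most test cases even though the overall algorithm is sound, which is exactly the situation a logic score is meant to catch.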

“The computing scientist’s main challenge is not to get confused by the complexities of his own making”

– E. W. Dijkstra

We identified those who had written nearly correct code: those who could think of an algorithm and express it in a programming language. However, was this just code that works but is inefficient, overly complex, or hard to maintain? Would it have resulted in the all-too-familiar “still loading” messages we see in our apps and software? We find that roughly 38% of developer candidates thought of an efficient algorithm while writing their solution, and 30% wrote code that was acceptable to reside in professional software systems. Taking both criteria together, roughly 20% of students write programs that are both readable and efficient.

To recap, a coding simulation scored on test cases alone keeps the 36% of candidates whose code passes the test cases and excludes the other 64% of the pool. Our AI finds that a further 16% of candidates, all previously excluded, have good skills, which grows the pool from 36% to 52%. Within this 52%, the candidates who follow best practices and write efficient code make up 20% of all candidates. Thus, the study tells us that about 20% of the developers here are the ones we should really talk to and hire! If we are ready to train candidates and run with them a little, there are various other score cuts one could use to make an informed decision.

Interested in keeping more great candidates in the funnel with fair, automated scoring? Book a demo with one of our experts today so we can discuss what you can do to hire the right software engineers, faster.